Master Project Topics

Below we outline current master project topics, which can be taken on individually or as a group. If you are interested in learning more about a topic, please contact the person listed for that topic or visit us at our offices.

You can also contact our team members for more potential topics or if you want to suggest a topic yourself!

How can large language models (LLMs) change the way robots and drones learn to move and make decisions?

Contact: Dr. Alper Yegenoglu

Please find details about the project here.

How do birds fly with such agility and precision, even through dense forests or turbulent winds? Can we capture the essence of this natural intelligence and transfer it to unmanned aerial vehicles (UAVs)?

Contact: Dr. Alper Yegenoglu

Please find details about the project here.

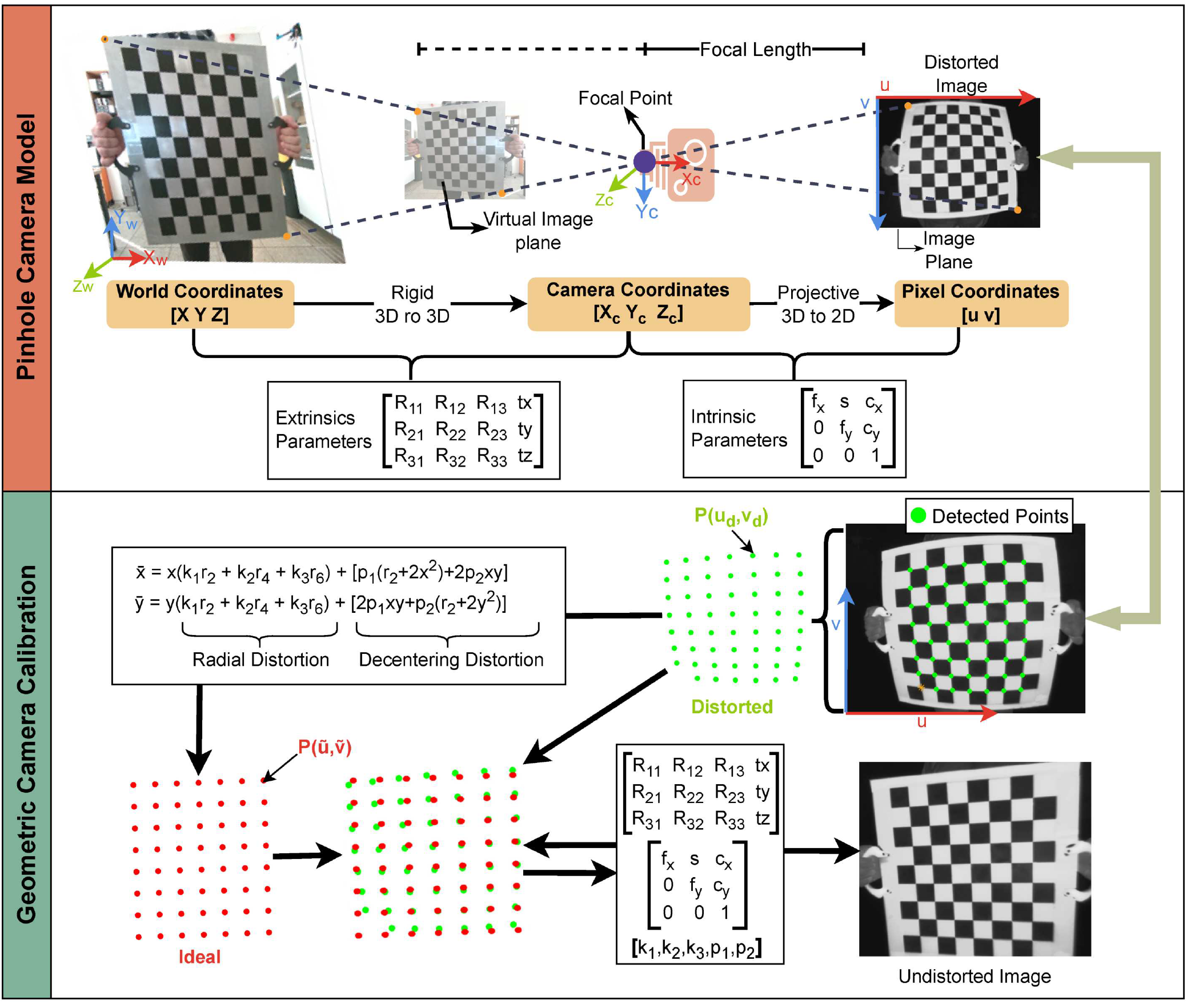

In this project, each group member will be assigned a different standard SLAM algorithm, such as ORB-SLAM3 or VINS-Mono. The calibration parameters (intrinsics and extrinsics) will be provided and explained in advance, allowing students to focus directly on understanding and experimenting with their assigned algorithm.
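To make the role of the calibration parameters concrete, the sketch below projects a 3-D point into an image with an ideal pinhole model. The extrinsics (a rotation R and translation t, assumed here to map world coordinates into the camera frame) move the point into the camera frame, and the intrinsic matrix K maps it to pixels. The function name and all numeric values are hypothetical. Real pipelines such as ORB-SLAM3 and VINS-Mono also model lens distortion and camera-IMU extrinsics, which this sketch omits.

```python
import numpy as np

def project_point(p_world, K, R, t):
    """Project a 3-D world point to pixel coordinates with an ideal pinhole camera.

    K    : 3x3 intrinsic matrix (focal lengths fx, fy and principal point cx, cy).
    R, t : extrinsic rotation (3x3) and translation (3,) taking world coordinates
           into the camera frame, p_cam = R @ p_world + t.
    Lens distortion is ignored in this sketch.
    """
    p_cam = R @ np.asarray(p_world, dtype=float) + t
    if p_cam[2] <= 0:
        raise ValueError("point is behind the camera")
    uvw = K @ p_cam            # homogeneous pixel coordinates
    return uvw[:2] / uvw[2]    # perspective division

# Made-up calibration values for illustration only.
K = np.array([[450.0,   0.0, 320.0],
              [  0.0, 450.0, 240.0],
              [  0.0,   0.0,   1.0]])
R = np.eye(3)
t = np.zeros(3)
print(project_point([0.2, -0.1, 2.0], K, R, t))  # pixel (365.0, 217.5)
```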

The first phase will involve running the algorithms in a ROS environment using pre-recorded datasets. This will allow students to become familiar with the pipeline, its inputs and outputs, and the general behavior of the system under controlled conditions. (If needed, a supervisor can also introduce students to the ROS environment using basic SLAM examples.)

In the second phase, the focus will shift to real-time deployment. We will provide access to our existing drone platform, where each group will integrate and test their assigned SLAM algorithm in live experiments. The drone environment will be set up and prepared beforehand to ensure safe and repeatable trials.

Finally, all groups will jointly evaluate the results. The comparison will cover accuracy, robustness, and runtime performance under different scenarios (e.g., fast motion, low light, indoor, and outdoor environments). The purpose of the project is not to develop new algorithms, but rather to gain a deep understanding of how state-of-the-art SLAM systems function in practice, their strengths and weaknesses, and how they behave when deployed on real robotic hardware.
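Accuracy comparisons of this kind are often reported as the absolute trajectory error (ATE): the estimated positions are rigidly aligned to the ground truth, and the RMSE of the remaining position residuals is reported. The sketch below assumes the estimated and ground-truth trajectories have already been time-associated into equal-length (N, 3) arrays. It uses a Kabsch/Umeyama alignment without scale, and the function names are hypothetical. For monocular runs, where scale is unobservable, a similarity (scale-aware) alignment is usually used instead.

```python
import numpy as np

def align_rigid(est, gt):
    """Least-squares rotation R and translation t such that R @ est[i] + t ~ gt[i].

    Kabsch/Umeyama alignment without scale; est and gt are (N, 3) arrays whose
    rows are already time-associated position samples.
    """
    mu_e, mu_g = est.mean(axis=0), gt.mean(axis=0)
    H = (est - mu_e).T @ (gt - mu_g)           # 3x3 cross-covariance
    U, _, Vt = np.linalg.svd(H)
    d = 1.0 if np.linalg.det(Vt.T @ U.T) >= 0 else -1.0  # avoid a reflection
    R = Vt.T @ np.diag([1.0, 1.0, d]) @ U.T
    t = mu_g - R @ mu_e
    return R, t

def ate_rmse(est, gt):
    """Absolute trajectory error: RMSE of position residuals after rigid alignment."""
    est = np.asarray(est, dtype=float)
    gt = np.asarray(gt, dtype=float)
    R, t = align_rigid(est, gt)
    residuals = est @ R.T + t - gt
    return float(np.sqrt(np.mean(np.sum(residuals**2, axis=1))))

# Synthetic check: a rigidly transformed copy of the ground truth gives ATE ~ 0.
rng = np.random.default_rng(0)
gt = rng.uniform(-5.0, 5.0, size=(200, 3))
theta = np.deg2rad(30.0)
Rz = np.array([[np.cos(theta), -np.sin(theta), 0.0],
               [np.sin(theta),  np.cos(theta), 0.0],
               [0.0, 0.0, 1.0]])
est = gt @ Rz.T + np.array([1.0, 2.0, 0.5])
print(ate_rmse(est, gt))  # only floating-point round-off remains
```

Robustness and runtime would be measured separately, for example as tracking-failure counts and per-frame processing time.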

Contact: Sebnem Sariozkan

Please find details about the project here.

Differentiable physics simulators (DiffSim) are revolutionizing how we approach learning in robotics. By equipping physics engines with automatic differentiation, they make it possible to compute pathwise gradients, an alternative to the traditional score-function gradient estimator. For smooth dynamics, pathwise gradients are unbiased and typically have much lower variance.
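The variance gap between the two estimators can be seen on a toy problem. The following is a minimal sketch, not tied to Warp or any simulator. It assumes the objective J(θ) = E[x²] with x ~ N(θ, 1), whose true gradient is 2θ. The score-function estimator multiplies f(x) by ∂/∂θ log p(x; θ) = x − θ. The pathwise estimator writes x = θ + ε and differentiates f(θ + ε) directly, which is what a differentiable simulator does through its dynamics.

```python
import numpy as np

def gradient_estimates(theta, n, seed=0):
    """Per-sample gradient estimates of d/dtheta E[x^2] with x ~ N(theta, 1)."""
    rng = np.random.default_rng(seed)
    eps = rng.standard_normal(n)
    x = theta + eps                  # reparameterised samples
    score = x**2 * (x - theta)       # f(x) * d/dtheta log p(x; theta)
    pathwise = 2.0 * x               # d/dtheta f(theta + eps)
    return score, pathwise

theta = 1.5
score, pathwise = gradient_estimates(theta, n=100_000)
print("true gradient :", 2 * theta)
print("score-function: mean %.3f, var %.2f" % (score.mean(), score.var()))
print("pathwise      : mean %.3f, var %.2f" % (pathwise.mean(), pathwise.var()))
```

Both estimators have mean 2θ. Analytically, the pathwise variance is 4, while the score-function variance is θ⁴ + 14θ² + 15 (about 51.6 at θ = 1.5). This toy objective is smooth; with non-smooth contact dynamics, pathwise gradients can become biased or poorly behaved, which is a practical issue a DiffSim project has to keep in mind.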

The primary objective of the project course is to develop scripts that model real robots using Warp as the DiffSim framework. These models can then be used to develop policy learning algorithms.

Contact: Quang Dung Dinh, M.Sc.

Please find details about the project here.

This project focuses on designing and implementing a handheld data collection device that integrates multiple complementary sensors, including a thermal camera, an RGB-D camera, an event camera, an IMU, and a LiDAR, all running on an NVIDIA Jetson platform for real-time data acquisition. To ensure reliable evaluation, ground-truth pose information will be provided by external tracking systems such as Vicon or a dedicated tracking camera. The multimodal data gathered with this device will be used for critical tasks such as sensor calibration, temporal and spatial alignment, and depth estimation, which are essential steps toward building robust UAV navigation systems.

By working on this project, students will not only develop practical expertise in multimodal sensor integration and synchronization, but also gain valuable experience with embedded platforms, calibration techniques, and systematic data collection. These skills form a solid foundation for advancing research in autonomous systems and can contribute directly to master’s thesis work or further exploration in UAV navigation and sensor fusion.
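Temporal alignment of the sensor streams is one of the tasks listed above. One simple approach assumes that every sensor stamps its data against a common clock (for example via hardware triggering or PTP). Each sample of one stream is then paired with the nearest sample of another and rejected if the time gap exceeds a tolerance. The sketch below implements this nearest-timestamp association. The function name `associate`, the example rates, and the 20 ms tolerance are illustrative assumptions, not the project's actual pipeline. High-rate IMU data is often interpolated rather than matched to a single sample.

```python
import bisect

def associate(query_ts, ref_ts, max_dt):
    """Pair each query timestamp with the index of the nearest reference timestamp.

    ref_ts must be sorted in ascending order. Returns a list with one entry per
    query timestamp: the matched index into ref_ts, or None if the nearest
    reference sample is more than max_dt seconds away.
    """
    matches = []
    for tq in query_ts:
        i = bisect.bisect_left(ref_ts, tq)
        best = None
        # The nearest neighbour is on one side of the insertion point.
        for j in (i - 1, i):
            if 0 <= j < len(ref_ts):
                if best is None or abs(ref_ts[j] - tq) < abs(ref_ts[best] - tq):
                    best = j
        if best is not None and abs(ref_ts[best] - tq) <= max_dt:
            matches.append(best)
        else:
            matches.append(None)
    return matches

# Hypothetical timestamps (seconds): a ~30 Hz camera against a 10 Hz LiDAR.
thermal = [0.000, 0.033, 0.066, 0.100, 0.133]
lidar = [0.0, 0.1, 0.2]
print(associate(thermal, lidar, max_dt=0.02))  # [0, None, None, 1, None]
```

Tightening or loosening `max_dt` trades the number of matched pairs against how far apart in time the paired measurements may be.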

Contact: Hürkan Sahin

Please find details about the project here.

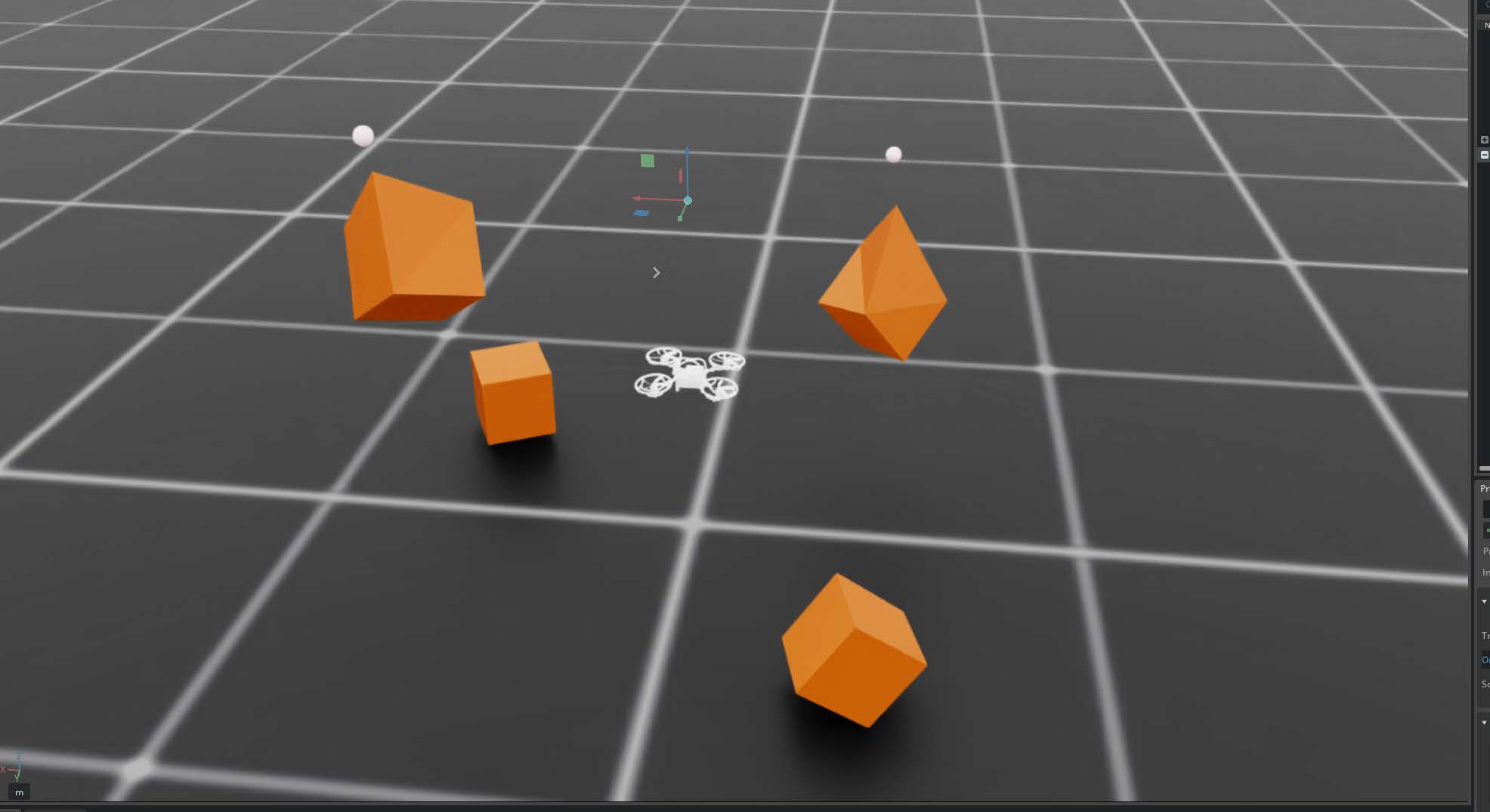

Generative AI is transforming how robots, especially drones, learn to navigate and interact with their environments. Diffusion models are among its most promising techniques: they create new motion paths by converting random noise into structured movement. Like a sculptor gradually revealing form from marble, these models transform randomness step by step into innovative, practical trajectories.
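In the widely used DDPM formulation, this step-by-step transformation is a learned reversal of a fixed noising process. In the equations below, a trajectory is written as a flattened vector τ (for example, stacked waypoints). They show the forward noising distribution and one reverse (denoising) step. The noise schedule β_t, the noise-prediction network ε_θ, and the choice σ_t² = β_t are standard in DDPM but are assumptions here; the project may use a different diffusion variant.

```latex
\begin{aligned}
q(\tau_t \mid \tau_0) &= \mathcal{N}\!\left(\sqrt{\bar\alpha_t}\,\tau_0,\;(1-\bar\alpha_t)\,I\right),
\qquad \alpha_t = 1-\beta_t,\quad \bar\alpha_t = \prod_{s=1}^{t}\alpha_s,\\
\tau_{t-1} &= \frac{1}{\sqrt{\alpha_t}}\left(\tau_t - \frac{\beta_t}{\sqrt{1-\bar\alpha_t}}\,\epsilon_\theta(\tau_t, t)\right) + \sigma_t z,
\qquad z \sim \mathcal{N}(0, I),\quad \tau_T \sim \mathcal{N}(0, I).
\end{aligned}
```

Sampling starts from pure noise τ_T and applies the reverse step for t = T down to 1, with z = 0 in the final step. Nothing in these equations enforces obstacle avoidance or dynamic limits, which is the safety gap this project addresses.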

However, this creativity introduces a significant challenge. Trajectories that appear smooth and efficient in theory may actually pass through obstacles, exceed physical capabilities, or create safety hazards for people and equipment.
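A basic form of the safety check described here tests each generated trajectory against known obstacles and a speed limit before execution. The sketch below makes several simplifying assumptions, all hypothetical: obstacles are spheres, waypoints are sampled every `dt` seconds, feasibility is reduced to a maximum speed `v_max`, and the function name `check_trajectory` is illustrative. It only checks the sampled waypoints, so a collision between two samples can be missed unless the trajectory is sampled densely enough.

```python
import numpy as np

def check_trajectory(waypoints, dt, obstacles, v_max, margin=0.0):
    """Return a list of violation messages; an empty list means the checks passed.

    waypoints : (N, 3) positions sampled every dt seconds.
    obstacles : list of (center, radius) pairs describing spherical obstacles.
    v_max     : maximum allowed speed in m/s (finite-difference estimate).
    margin    : extra clearance added to every obstacle radius.
    """
    p = np.asarray(waypoints, dtype=float)
    violations = []
    for k, (center, radius) in enumerate(obstacles):
        dist = np.linalg.norm(p - np.asarray(center, dtype=float), axis=1)
        hits = np.nonzero(dist < radius + margin)[0]
        if hits.size:
            violations.append(f"obstacle {k} violated at waypoint {hits[0]}")
    speeds = np.linalg.norm(np.diff(p, axis=0), axis=1) / dt
    too_fast = np.nonzero(speeds > v_max)[0]
    if too_fast.size:
        i = too_fast[0]
        violations.append(
            f"speed {speeds[i]:.2f} m/s exceeds v_max between waypoints {i} and {i + 1}")
    return violations

# A straight 4 m line at 2 m/s with an obstacle sitting on the path.
line = np.linspace([0.0, 0.0, 1.0], [4.0, 0.0, 1.0], 5)
print(check_trajectory(line, dt=0.5, obstacles=[((2.0, 0.0, 1.0), 0.3)], v_max=3.0))
```

A filter like this can only reject unsafe samples after generation. Approaches that steer the diffusion process itself toward feasible trajectories are the more ambitious direction this project points to.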

This project challenges students to address a cutting-edge question in robotics: How can we leverage generative AI's creative potential while guaranteeing that drone trajectories remain safe, reliable, and physically feasible in real-world conditions?

Contact: Erdi Sayar

In this project, you will develop a deep reinforcement learning (DRL) framework that exploits proprioceptive signals for the adaptive control of a soft aerial robot.

Contact: Vikas Chidananda, M.Sc.

Please find details about the project here.