Research page of the Automatic Control Group (RAT)

Here you will find the core research directions and projects of our group members, who seek to keep pace with rapid developments in areas such as artificial intelligence, machine learning, computer vision, cloud systems, and cyber-physical systems, as well as the availability of low-cost processors and various sensors. With these developments, robotics and autonomous systems have become a game changer in academia and industry. Several companies are investing billions of dollars in robotics, and society has already begun to discuss how it can adapt to the revolutionary research activities in robotics and artificial intelligence.

Our ultimate goal: Smarter robots

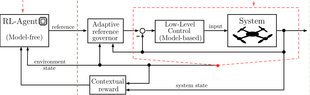

Our primary research interests and contributions lie in control systems, reinforcement learning, computational intelligence, and robotic vision, with applications in the guidance, control, and automation of unmanned ground and aerial vehicles. In today’s world, it is not sufficient to design autonomous systems that can only repeat a given task. We must advance the state of the art in autonomy toward smarter systems that learn and interact with their environment, collaborate with people and other systems, plan their future actions, and execute multiple time-varying tasks accurately.